Highlights

If there was one unspoken mantra running through this DesignMeets x BDI panel, it was this: just because we can doesn’t mean we should. And that’s exactly what makes this moment interesting.

AI doesn’t need empathy. Designers do.

We shouldn’t expect machines to be human. But we must expect humans to design with humanity in mind.

Three kinds of intelligence, one design practice.

We now get to work with individual intelligence, collective intelligence, and artificial intelligence – each bringing different strengths to the table.

Context is the new design frontier.

Without cultural and organizational context, AI tools just amplify assumptions or, worse, replicate harm. With the right framing, they can reveal patterns we’d never see alone.

The hype is real. The responsibility is too.

AI offers transformative power. Our job isn’t to resist it, but to adopt it thoughtfully so it doesn’t just scale bias or busywork.

Human-centred design needs better human-centred design thinking.

If we want ethical outcomes, we can’t just retrofit values onto the tech. We need to embed them in the teams.

Synthetic users can’t replace human stories.

AI-generated “participants” may help stress-test flows, but they can’t give us lived experience, unexpected behaviours or emotional weight. They can reflect the past, but they can’t reliably anticipate the future, especially while human experience is changing as we live it.

A New Age of Design Tensions: Human, Machine, and the Messy Middle

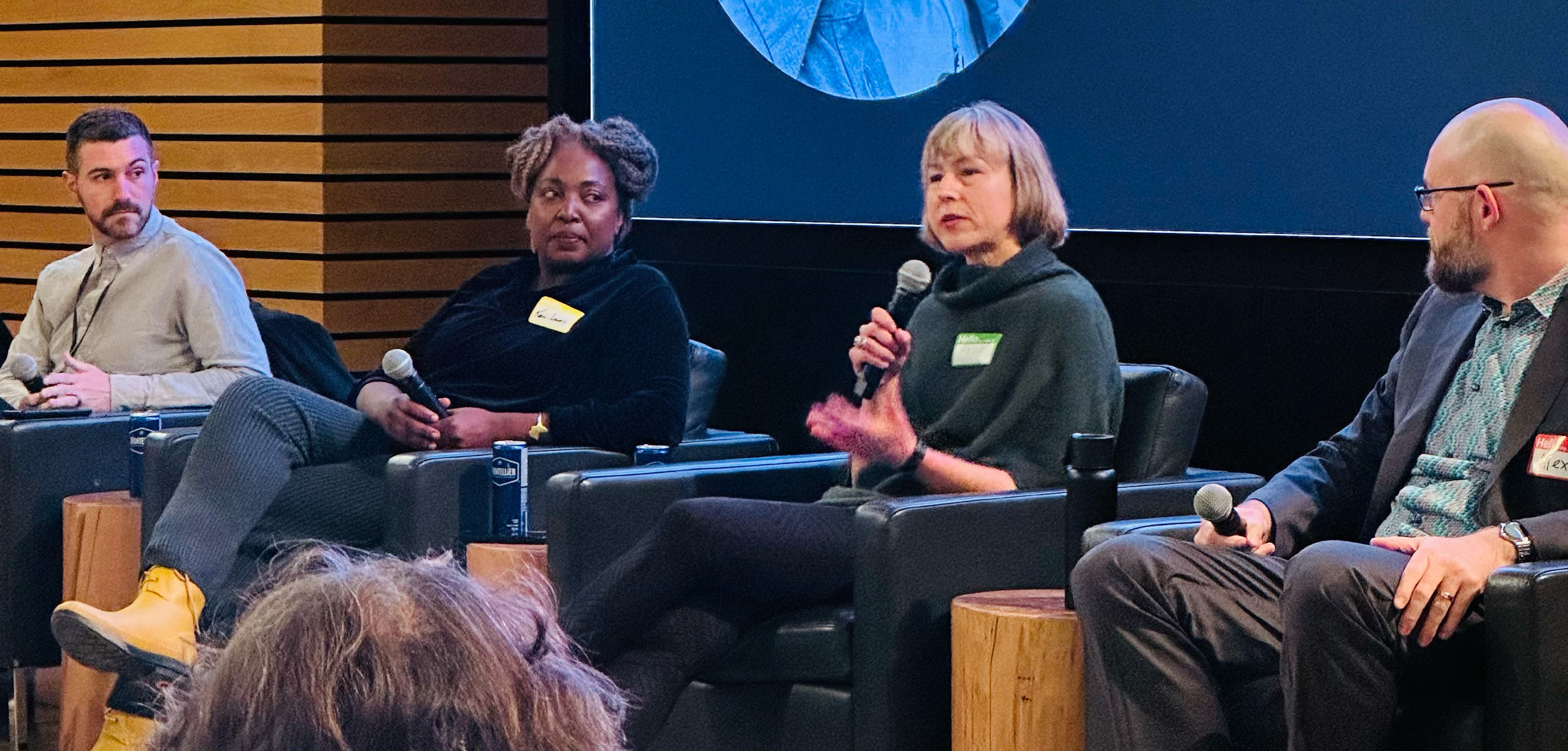

In a session full of nuance and curiosity, four design leaders took the stage to wrestle with the real implications of AI: not just what it can automate, but how it can elevate our work when we use it with intention.

The evening opened with DesignMeets founder Ian Chalmers situating the conversation in a decade-plus of events about how design shapes technology, culture and systems. This time, the question was: what does “human-centred” really mean when AI is embedded in everything we do?

Moderated by Emma Aiken-Klar and Michael Dila, the panel offered a mix of optimism and pragmatism. Nobody was anti-AI. The prevailing mood was closer to: this is here, this is powerful, so let’s get good at using it well.

Kem-Laurin Lubin cut through the optimism early:

“We’re clearly in a hype cycle, and we don’t yet have the language or leadership to handle it.”

That gap, she argued, makes it harder to talk about ethics in concrete ways and to challenge narratives that reduce everything to speed and efficiency.

Drawing on her work in research and teaching, Stefanie Hutka warned against treating AI as a neutral tool:

“Technology is never neutral. There are always trade-offs: what’s made easier, what’s left out, and who gets affected.”

Her focus wasn’t fear, but craft. If we don’t stay in the loop, design teams risk becoming passive amplifiers of whatever the model decides is “normal.”

Patrick Bach brought a big-organization perspective. Many companies, he noted, are under pressure to “do something with AI,” sometimes faster than they understand their own blind spots. The opportunity is real, but so is the need for better questions:

“We should be building systems that reflect the complexity of people’s lives, not sanding it down just because the model likes clean patterns.”

And Alex Ryan zoomed out with a systems-thinking lens shaped by work across governments and international organizations. For him, the point isn’t making AI magical; it’s making our use of it more deliberate:

“The real question isn’t how to make AI more human. It’s how to make humans more humane in how we design AI.”

These ideas hung in the room.

Three Kinds of Intelligence on the Same Team

One of the most useful frames of the night was the idea that designers now have three kinds of intelligence to work with:

- Individual intelligence – our own judgment, ethics, experience and craft.

- Collective intelligence – what emerges when teams and communities think together.

- Artificial intelligence – pattern recognition and generation at a speed and scale we can’t match.

The opportunity is not to pick a winner, but to weave them together.

Alex described how this looks in practice. In co-design workshops, he’ll:

- Start with individual thinking (sketches, notes, Post-its).

- Move into collective sense-making (group share-outs, clustering, discussion).

- Bring in artificial intelligence to quickly synthesize and theme everything.

- Then come back to the humans to critique, refine and redirect.

Instead of AI quietly working in the background, it becomes a visible collaborator – another “person” in the room whose strengths and limits everyone understands.

Pixels, Post-its, and the Craft at Risk

A big tension running through the night was craft: what happens when AI starts doing the visible parts of design work?

Patrick was blunt:

“If your job is pushing pixels or arranging sticky notes, AI can already do a lot of that. The value of design has to move somewhere else.”

Enterprise-grade design systems and tools like Figma’s AI features mean product managers and non-designers can spin up plausible interfaces quickly. On the research side, AI can cluster interview notes, name themes and spit out neat diagrams in seconds.

That’s convenient – and dangerous. If designers only ever see the AI-generated summary, they lose the muscle of sitting with raw stories, contradictions and edge cases. An audience member captured it perfectly:

“Are we the last generation that actually knows how to synthesize Post-its?”

Rather than reading this as a threat, the panel read it as a nudge:

- Let AI handle some of the mechanical work.

- Free designers to focus on framing, connecting dots, and exploring unintended consequences.

- Use AI to expand the option space, not to outsource judgment.

As for the audience member’s question, the panel’s answer was: only if we choose to stop practising. The tools don’t remove the need for critical thinking; they just make it more obvious where it’s missing.

Where Do Designers Fit In?

So what’s the designer’s role in all this? According to the panel, it’s not to chase every new tool. It’s to stay anchored in people, context and consequences – and to bring those three intelligences into conversation.

“We don’t need designers to become data scientists,” said Hutka. “But we do need them to ask better questions – and to be in the room early, before assumptions harden into roadmaps.”

In practice, that looks like:

- Questioning the data and proxies that models are built on

- Naming who is not in the dataset

- Challenging when “speed” is used as an excuse to skip participation

Alex described one way this is already changing practice: using AI during co-design workshops rather than after. Participants work analog first – sketches, Post-its, canvases – then the group digitizes, runs everything through AI for a first-pass synthesis, and critiques the patterns live.

What used to be weeks of solitary analysis becomes a shared sense-making moment where AI is a visible collaborator, not an invisible oracle.

Synthetic Users: Help or Harm?

One of the liveliest exchanges of the night came from an audience question about synthetic users — AI-generated “participants” used to test designs.

A researcher in the room had just heard “synthetic users” flagged as a top UX trend for 2026. Their worry:

“If I tell stakeholders some of my participants were real people and some were synthetic, does that build trust or destroy it?”

The panel saw real design space here, but also clear boundaries. Synthetic users could be useful for:

- Training junior researchers on interviewing and synthesis

- Piloting discussion guides or survey flows before going into the field

- Stress-testing information architecture when access to real users is extremely limited

But they drew a firm line at substitution:

- Synthetic users can’t offer lived context, body language, or that one surprising comment that changes the direction of a project.

- They can’t move a leadership team the way a real voice or painful usability clip can.

- To the undiscerning eye, synthetic “insights” can look polished and quantitative, while hiding shallow foundations.

The emerging consensus: synthetic users may become a useful supplement for certain tasks, but they are not a replacement for being in conversation with real humans — especially in ambiguous, high-stakes or equity-sensitive work.

Equity, Power, and the Global View

Kem-Laurin anchored the conversation in equity and geography. For many in the Global South, she noted, AI isn’t arriving as a clean slate – it’s landing on top of existing inequalities.

She pointed to hiring and health systems that use “neutral” fields like school or postal code as proxies that quietly map to race, gender or class.

“AI doesn’t scare me. What scares me is when we forget who we’re designing for, and who gets left out of the data.”

Her call to action for designers was not to walk away from AI, but to treat equity as part of the brief:

- Break the GUI glass and look at the data, models and governance underneath.

- Question naming conventions and proxies that encode bias.

- Use our position between business, tech and community to push for systems that optimize not just for efficiency, but for dignity.

In other words, if AI is a new kind of intelligence on the team, designers have to be the ones asking how it behaves toward the most vulnerable people in the room.

Humility as a Design Tool

The panel didn’t end with tidy solutions. In true DesignMeets fashion, it ended with better questions:

- How do we design systems that resist bias instead of reproducing it?

- Who gets to define what “human-centred” really means when AI is in the loop?

- In the rush to partner with AI, are we outsourcing parts of ourselves we shouldn’t?

What emerged wasn’t cynicism, but a call for humility and skill-building. AI may accelerate innovation, but it also demands reflection, new literacy and a willingness to experiment in public.

Alex offered a closing “how might we” that brought the three intelligences back into focus:

“How might we design AI systems that clarify context, maintain diversity, reveal uncertainty, and strengthen critical thinking, agency and identity for everyone?”

The future won’t be built by code alone. It will be shaped by the values, tensions and trade-offs design teams choose to reckon with – and by whether we, as designers, show up as bridge-builders and sentinels, not just tool operators.

This article was recorded, transcribed and edited by humans and machines, then crowd-edited. No AIs were harmed in the drafting of this article.

—

About DesignMeets™

Proudly sponsored by Pivot Design Group, DesignMeets is a series of social events where the design community can connect, collaborate, and share ideas. Join us at a DesignMeets™ event to network, learn, and be inspired.

Sign up for our newsletter to stay in the loop.